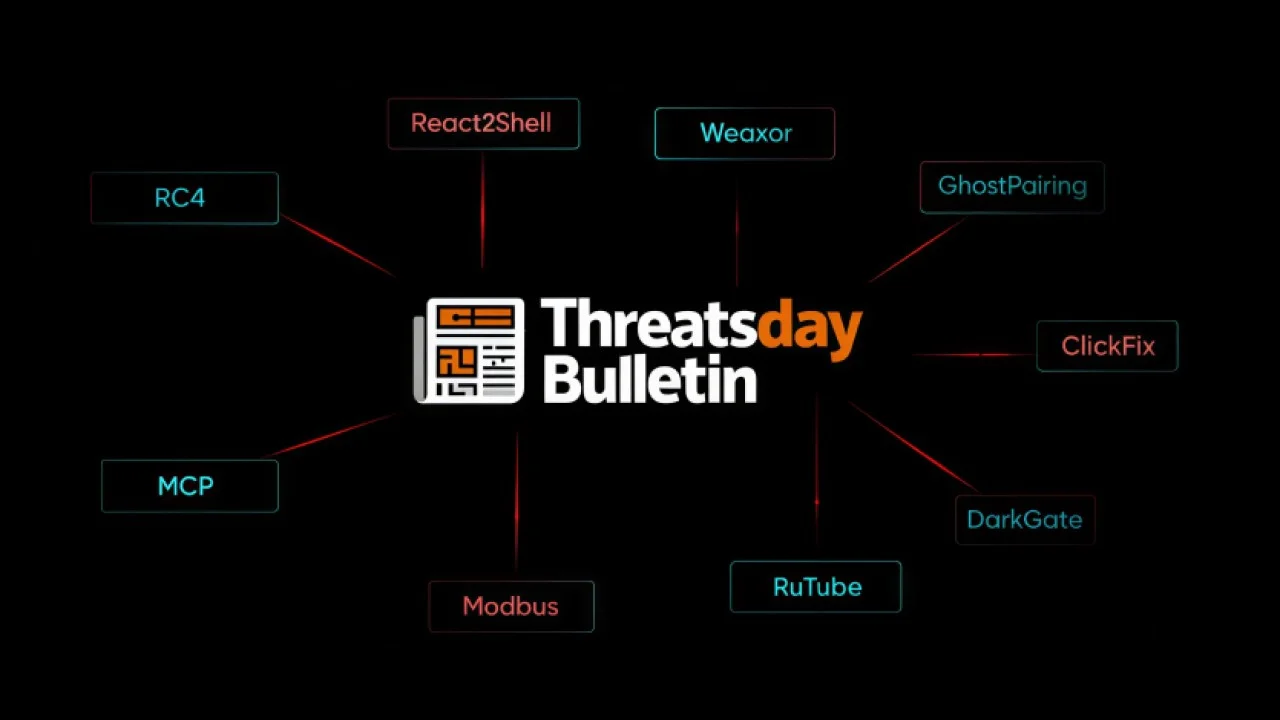

ThreatsDay Bulletin: WhatsApp Hijacks, MCP Leaks, AI Recon, React2Shell Exploit and 15 More Stories

SAN FRANCISCO (WHN) – The cybersecurity world didn’t get a moment’s rest this week. A surge of activity, from exploited web frameworks to new attack vectors targeting popular messaging apps, kept incident response teams scrambling. This isn’t just noise; it’s a clear signal that the attack surface is expanding, fueled by rapid AI integration and legacy system vulnerabilities.

This week’s bulletin highlights threats to WhatsApp, a significant leak from the MCP (Multi-Chip Package) sector, and a novel exploit targeting React applications, dubbed React2Shell. It’s a reminder that even as we deploy advanced security postures like Zero Trust, the attackers are always probing for the weakest link.

The MCP leak, details of which are still emerging, reportedly involves sensitive design documents. These Multi-Chip Packages are crucial for integrating multiple semiconductor dies into a single package, enabling higher performance and smaller form factors, especially for AI accelerators. Exposure of such data could accelerate competitor development or, worse, reveal design flaws exploitable by nation-state actors.

Meanwhile, WhatsApp users are facing a new wave of account hijacks. The mechanics aren’t entirely clear, but reports suggest a social engineering component, potentially combined with exploiting loopholes in the app’s two-factor authentication setup. This isn’t new territory for messaging apps, but the scale of this particular wave is concerning, impacting users across multiple regions.

Then there’s React2Shell, an exploit that targets vulnerabilities in how React, the popular JavaScript library for building user interfaces, handles certain server-side rendering scenarios. Developers who aren’t diligently sanitizing user inputs passed to server-side components can inadvertently open the door to remote code execution. The attack chain, according to early analysis, involves injecting malicious JavaScript that then leverages insecure deserialization or command injection flaws on the server.
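
As a rough illustration of the defensive side, the sketch below parses an untrusted JSON payload and copies out only the fields it expects, instead of handing the deserialized object straight to server-side logic. It is not a patch for React2Shell and uses no React API. The `ProfileUpdate` shape, the `parseProfileUpdate` function, and the field limits are hypothetical, chosen only to show the pattern.

```typescript
// Hypothetical payload shape for illustration; not taken from any React API.
interface ProfileUpdate {
  displayName: string;
  age: number;
}

// Keys commonly abused for prototype pollution during deserialization.
const FORBIDDEN_KEYS = ["__proto__", "constructor", "prototype"];

// Parse untrusted JSON, then return a fresh object holding only the
// expected, type-checked fields. Anything else is dropped or rejected.
function parseProfileUpdate(raw: string): ProfileUpdate | null {
  let data: unknown;
  try {
    // The reviver removes forbidden keys at every nesting level.
    data = JSON.parse(raw, (key: string, value: unknown) =>
      FORBIDDEN_KEYS.indexOf(key) !== -1 ? undefined : value
    );
  } catch {
    return null; // malformed input is rejected, never "repaired"
  }
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    return null;
  }
  const record = data as Record<string, unknown>;
  const displayName = record["displayName"];
  const age = record["age"];
  if (typeof displayName !== "string" || displayName.length === 0 || displayName.length > 64) {
    return null;
  }
  if (typeof age !== "number" || Math.floor(age) !== age || age < 0 || age > 150) {
    return null;
  }
  // Unknown fields such as "cmd" never reach the caller.
  return { displayName: displayName, age: age };
}
```

The design choice here is to build a new object from validated fields rather than trying to strip dangerous content out of the original one.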

The implications of React2Shell are significant. React powers a vast swathe of the modern web. A widespread vulnerability, even if it requires specific misconfigurations, could impact millions of websites and web applications. It underscores the need for developers to not only understand their framework’s capabilities but also its potential pitfalls, especially when dealing with untrusted data.

Beyond these headline-grabbing incidents, the bulletin also points to a rise in AI-powered reconnaissance. Attackers are reportedly using generative AI models to automate the scanning of networks, identify potential vulnerabilities, and even craft more convincing phishing emails. This isn’t just about speed; it’s about the sophistication of the attacks. AI can analyze vast datasets of vulnerabilities and system configurations far faster than human analysts, identifying patterns that might otherwise be missed.

The concept of “Zero Trust Everywhere,” as advocated by some security vendors, is directly challenged by these evolving threats. Zero Trust is a security model that assumes no user or device can be trusted by default and requires strict verification for every access request. Yet as AI becomes more deeply integrated into our infrastructure, from cloud services to endpoint devices, the attack surface for AI-specific vulnerabilities also grows.
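
The deny-by-default idea behind Zero Trust can be sketched in a few lines. This is a simplified illustration under stated assumptions, not any vendor’s implementation: the `AccessRequest` and `PolicyRule` shapes and the `isAllowed` function are hypothetical names, and real deployments evaluate far richer signals.

```typescript
// All names here are hypothetical and exist only for illustration.
interface AccessRequest {
  userId: string;
  deviceCompliant: boolean;
  mfaVerified: boolean;
  resource: string;
  action: "read" | "write";
}

interface PolicyRule {
  resource: string;
  action: "read" | "write";
  allowedUsers: ReadonlyArray<string>;
}

// Evaluated on every request: no decision is cached, and nothing is
// trusted because of network location or an earlier successful check.
function isAllowed(req: AccessRequest, rules: ReadonlyArray<PolicyRule>): boolean {
  // Identity and device posture must both be verified first.
  if (!req.deviceCompliant || !req.mfaVerified) {
    return false;
  }
  // Access is granted only when an explicit rule matches; the default is deny.
  return rules.some(
    (rule) =>
      rule.resource === req.resource &&
      rule.action === req.action &&
      rule.allowedUsers.indexOf(req.userId) !== -1
  );
}
```

A request from a known user on a non-compliant device is refused even if a rule would otherwise match, which is the property the model depends on.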

Consider the implications for GenAI (Generative AI). While it promises to streamline workflows and unlock new creative potential, it also introduces new attack vectors. Adversarial attacks on AI models, data poisoning, and the potential for AI to generate malicious code or disinformation are all concerns that need to be addressed proactively. The MCP leak, for instance, could have direct implications if it exposes architectural weaknesses in AI chips themselves.

The sheer volume of reported incidents, over 18 in this latest bulletin, suggests a systemic issue. It’s not just about patching individual exploits; it’s about rethinking how we build and secure our digital infrastructure. The speed at which new threats emerge, often leveraging existing technologies in novel ways, requires a more dynamic and adaptable approach to cybersecurity.

The React2Shell exploit, for example, highlights how a popular, well-regarded technology like React can become a vector for attacks when not implemented with security best practices. Developers need to treat every input from a client-side application as potentially hostile, even when rendering on the server. This requires rigorous input validation and output encoding at every stage of the pipeline.
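
To make “input validation and output encoding” concrete, here is a minimal sketch in plain TypeScript. It assumes a hypothetical comment feature; `isValidUsername`, `escapeHtml`, and `renderComment` are illustrative names rather than React APIs, and the username rule is an arbitrary example of an allowlist.

```typescript
// Input validation: an allowlist of the characters a username may contain.
function isValidUsername(input: string): boolean {
  return /^[A-Za-z0-9_-]{3,32}$/.test(input);
}

// Output encoding: neutralize HTML metacharacters before the text is
// interpolated into markup.
function escapeHtml(text: string): string {
  return text.replace(/[&<>"']/g, (ch: string) => {
    switch (ch) {
      case "&":
        return "&amp;";
      case "<":
        return "&lt;";
      case ">":
        return "&gt;";
      case '"':
        return "&quot;";
      default:
        return "&#39;"; // the only remaining match is a single quote
    }
  });
}

// Hypothetical server-side renderer: the author is validated against the
// allowlist, and the free-text body, which cannot be allowlisted, is encoded.
function renderComment(author: string, body: string): string {
  if (!isValidUsername(author)) {
    throw new Error("invalid username");
  }
  return "<p><b>" + escapeHtml(author) + "</b>: " + escapeHtml(body) + "</p>";
}
```

The two steps are complementary: validation rejects input that should never have been accepted, while encoding keeps whatever is accepted from being interpreted as markup.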

Furthermore, the MCP leak serves as a stark reminder of the critical importance of supply chain security in hardware. The silicon fabrication process is complex, and any compromise, whether through intellectual property theft or the introduction of hardware Trojans, can have far-reaching consequences. This is especially true for chips designed for AI, where the stakes for performance and security are incredibly high.

The use of AI for reconnaissance is particularly worrisome. It allows attackers to scale their operations significantly. Instead of manually probing a few systems, they can use AI to identify hundreds or thousands of potential targets and then tailor their attacks based on the specific systems they find. This increases the need for automated security responses and more intelligent threat detection systems.

The Zero Trust model aims to mitigate this by enforcing granular access controls. But even within a Zero Trust framework, an AI-powered attacker might be able to exhaust authentication mechanisms or exploit subtle misconfigurations in policy enforcement. The interplay between AI-driven attacks and Zero Trust defenses is a critical area of research and development.
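
One common countermeasure to automated attempts to exhaust authentication mechanisms is to throttle attempts per account or per source. The sliding-window limiter below is a minimal sketch of that idea; the `AttemptLimiter` class, its threshold, and its window length are hypothetical, and a production system would persist state and combine this with other signals.

```typescript
// Hypothetical sliding-window limiter; the values used are illustrative.
class AttemptLimiter {
  // Prototype-free object so arbitrary keys cannot collide with built-ins.
  private attempts: { [key: string]: number[] } = Object.create(null);
  private maxAttempts: number;
  private windowMs: number;

  constructor(maxAttempts: number, windowMs: number) {
    this.maxAttempts = maxAttempts;
    this.windowMs = windowMs;
  }

  // Returns true and records the attempt if `key` is under its limit at
  // time `nowMs`; returns false, recording nothing, if the limit is reached.
  allow(key: string, nowMs: number): boolean {
    const cutoff = nowMs - this.windowMs;
    // Keep only timestamps that are still inside the window.
    const recent = (this.attempts[key] || []).filter((t) => t > cutoff);
    if (recent.length >= this.maxAttempts) {
      this.attempts[key] = recent;
      return false;
    }
    recent.push(nowMs);
    this.attempts[key] = recent;
    return true;
  }
}
```

Passing the current time in as an argument, rather than reading a clock inside the class, keeps the behavior deterministic and easy to test.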

The WhatsApp hijacks, while seemingly a user-level issue, could also point to deeper vulnerabilities in the platform’s authentication protocols or its handling of user data. The rapid pace of feature development in consumer applications sometimes outstrips security review, creating opportunities for exploitation. The company, when pressed, stated they are “investigating the reports thoroughly.”

The sheer diversity of threats, from application-level exploits like React2Shell to hardware-level concerns like the MCP leak and the pervasive threat of AI-assisted attacks, demands a holistic approach. Simply deploying more tools won’t suffice. It requires a fundamental shift in how we think about security, moving from perimeter defense to continuous verification and resilience at every layer of the digital ecosystem.

The ongoing battle against these emerging threats is directly tied to the broader AI race. As organizations race to deploy GenAI capabilities, they must concurrently bolster their defenses against AI-powered attacks. The next wave of cybersecurity innovation will likely be defined by how effectively we can leverage AI to defend against AI-driven adversaries, all while maintaining the integrity of our increasingly complex hardware and software supply chains.